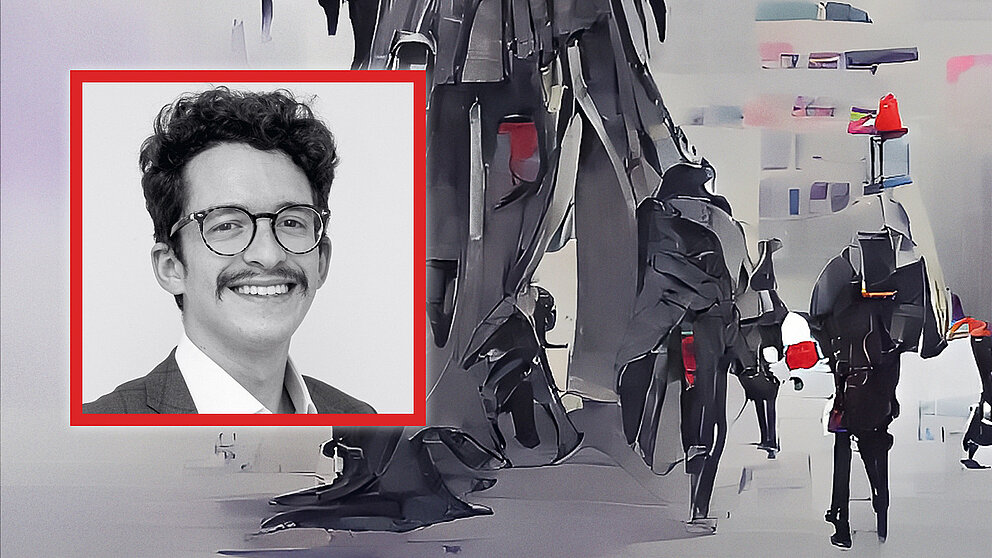

JOSÉ RENATO LARANJEIRA DE PEREIRA

José Renato Laranjeira de Pereira is a German Chancellor Fellow at iRights.Lab in Berlin and one of the founders of the Brazilian think tank Laboratory of Public Policy and Internet (LAPIN). In 2021, he took part in a Humboldt Communication Lab.

Artificial intelligence is a powerful instrument in the hands of digital service providers. It serves users customised advertising, supplies people on social networks with information tailored to their interests and accurately infers personal characteristics, such as someone’s political persuasion or sexual orientation.

“This is a huge benefit to business, but there are dangers for users,” says the Brazilian legal scholar José Renato Laranjeira de Pereira. “AI can promote racism, homophobia and radicalisation, partly because it reinforces long-held prejudices. For example, it can allocate black people a lower credit rating. On social networks like Facebook, Twitter and TikTok it may tend to feed us polarising content from radical parties because this is what supporters and opponents discuss and share more intensively. That increases the amount of time spent on the network – which is part of the intention because we then consume more advertising.”

At the Berlin iRights.Lab, a think tank for the challenges of digitisation, Laranjeira de Pereira works on strategies for increased transparency and user rights. “Social networks like Facebook should be open about the way their AI functions – so that even the layman can understand how it makes the respective decisions,” he says. “As a user, I should have the right to be informed why I am shown homophobic content, for instance. In a best-case scenario, this would take just a single click.”